Sifakis, who once sported a great mustache but is now 72 and clean-shaven, mainly spoke about autonomous cars. He has great theory and deep thought to bring to the topic. Unfortunately, he’s trying to set rules for a race that is already underway.

This is common in the world of Moore’s Law, where change happens faster than people can adapt to it. The press office here, for instance, has very nice networking cables, but all the reporters are using WiFi.

Sifakis’ work asks whether we should trust self-driving cars. Views vary. Some people distrust Tesla cars so much they block the chargers with giant pick-up trucks. Others go to sleep while rolling along freeways at 70 mph, with the autopilot on.

“Once you certify an aircraft as a product, you can’t update it,” Sifakis noted. It’s a key point. Boeing must put its software fixes for the 737 Max through testing and standards approvals before the plane returns to the air, because we must have absolute trust in those fixes before they go in.

For self-driving cars, the regulatory model is more lax. “Public authorities allow self-certification,” Sifakis said. Software is updated monthly, and manufacturers apply statistics to prove their designs work. The implied logic: it hasn’t caused a crash yet, so it must not be capable of causing one.
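To see why a clean record is weaker evidence than it sounds, here is a minimal sketch of the standard zero-failure confidence bound (the basis of the statistical "rule of three"). It is not from Sifakis’ talk. The function name `failure_rate_upper_bound` is made up for illustration, and treating each mile as an independent trial with the same failure probability is a simplifying assumption.

```python
def failure_rate_upper_bound(failure_free_trials: int, confidence: float = 0.95) -> float:
    """Upper confidence bound on the per-trial failure probability
    after observing zero failures in `failure_free_trials` trials.

    Assumption (illustrative only): trials are independent and share
    the same failure probability p.
    """
    if failure_free_trials <= 0:
        raise ValueError("need at least one failure-free trial")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be strictly between 0 and 1")
    # Zero failures in n trials has probability (1 - p)^n.
    # Solve (1 - p)^n = 1 - confidence for p to get the bound.
    return 1.0 - (1.0 - confidence) ** (1.0 / failure_free_trials)


if __name__ == "__main__":
    # Hypothetical mileage figures, chosen only to show the trend.
    for miles in (1_000, 1_000_000, 100_000_000):
        bound = failure_rate_upper_bound(miles)
        print(f"{miles:>11,} failure-free miles: p could still be up to {bound:.2e} per mile")
```

The bound shrinks roughly as 3 divided by the number of trials, but it never reaches zero. A record with no crashes limits how likely a failure is; it does not show that failure is impossible.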

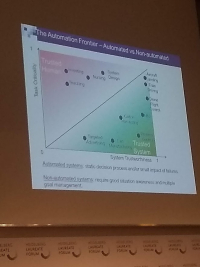

When should you trust a system? Sifakis offered two criteria. One is the degree of criticality, the risk that comes with failure, as with the airplane or (to take a less critical case) a toaster. The other is system trustworthiness, whether the system will behave as designed, its probability of failure.

Sifakis brought a graph to illustrate, where trustworthiness rises with technology but distrust also rises with how critical the task is. Targeted ads are OK because, while they’re not trusted, they’re not critical. Self-driving cars may have a lot more trust built into the design, but are distrusted because failure can mean death.

There’s also a pyramid of knowledge truthfulness, ranging from facts and syllogisms at the bottom to mathematical truths at the top. So long as there are human-driven cars, self-driving cars must prove themselves to an incredibly high degree to be accepted. Once they become the norm, the need for trust will decline, since you can program trust into the machines.

“If we can solve the problem of self driving cars it is a huge step toward true artificial intelligence,” Sifakis concluded, assuming human ignorance doesn’t get in the way. If makers of automated cars weren’t racing one another with drivers as guinea pigs, his work could be saving a lot of lives.