First. There was always a contradiction inside the nature of the company. It was a non-profit owning a for-profit. That had to resolve itself. Non-profits do what they do for humanity. For-profits do it for themselves. You can’t split the difference.

Second. Why is Microsoft trying to put this toothpaste back in the tube? Roger McNamee explained it all on CNBC.

UPDATE: Microsoft is said to have done just that, reinstalling Altman as CEO late on November 21. Whether that erases anyone’s memory of the week remains to be seen.

Artificial Intelligent Bullshit

Microsoft didn’t really hand OpenAI $10 billion. They handed it $10 billion worth of Azure services. In exchange they got almost half the equity in the for-profit entity. What price they are “charging” for these services remains unknown. Were they sold at cost, or at retail? It makes a difference in terms of how much Microsoft really invested and how much the resulting company is worth. Since OpenAI was seeking new investment, it seems they needed cash. Maybe that $80-90 billion valuation was bogus from the off.
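To see why the cost-versus-retail question matters, here is a rough back-of-the-envelope sketch. The $10 billion headline comes from the paragraph above; the gross margin is a made-up number for illustration only, since the real pricing is unknown, and the function name is hypothetical.

```python
def real_outlay(retail_value: float, gross_margin: float) -> float:
    """What it costs the provider to deliver services billed at retail_value.

    gross_margin is the fraction of the retail price that is markup
    (0.0 means the services were sold at cost).
    """
    if not 0.0 <= gross_margin < 1.0:
        raise ValueError("gross_margin must be in [0, 1)")
    return retail_value * (1.0 - gross_margin)


HEADLINE = 10e9        # the $10 billion figure quoted in the text
ASSUMED_MARGIN = 0.60  # hypothetical cloud markup, NOT a reported number

sold_at_cost = real_outlay(HEADLINE, 0.0)               # credits priced at cost
sold_at_retail = real_outlay(HEADLINE, ASSUMED_MARGIN)  # credits priced at retail

print(f"Outlay if priced at cost:   ${sold_at_cost / 1e9:.1f} billion")
print(f"Outlay if priced at retail: ${sold_at_retail / 1e9:.1f} billion")
```

Under that assumed margin, the same headline number represents far less actual spending when the credits are booked at retail. That gap is the point of the question.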

Third, and probably most important. The company’s advocates, like SPAC barker Chamath Palihapitiya, insist that OpenAI was creating “Artificial General Intelligence,” or AGI.

Trouble is, there’s no such thing. Today’s Generative AI is software that uses a lot of database input to create various kinds of output. It’s limited as to topic, limited as to capability, limited all around. Generative AI is just a step up from old-fashioned database Software as a Service, as sold by Salesforce, ServiceNow, and others.

ChatGPT does specific things with specific inputs. It’s not an artificial brain. Jeff Jarvis, who happened to be attending a conference on AI as all this went down, calls it “Artificial Intelligence Bullshit.” It’s a tool, an application. Generative AI can’t tell you anything it doesn’t already know. It can’t make imaginative leaps.

Microsoft’s Role

That’s not to say Generative AI isn’t useful. It can help programmers by generating code from a description of what the code should do. It can help writers by knowing the rules of grammar. It can take all the data you have about a patient, or everything available about corporate resources (including people), and process that into an appointment, a staffing schedule, even a possible diagnosis. But AI is not a programmer. It’s not a writer, or a doctor. It’s software.

OpenAI hyped generative AI into what carnival barkers now call general intelligence. This was done to make money, to create regulatory capture, and to keep small groups (or open source foundations) out of the market.

This is all Microsoft’s problem now. That’s where OpenAI should have been all along. Satya Nadella needs to take responsibility for what ChatGPT does. Microsoft needs to take responsibility for its input, and for its performance. Most important, someone needs to shoulder the development costs, which, given the overheated market for “AI Talent,” are going to be inflated.

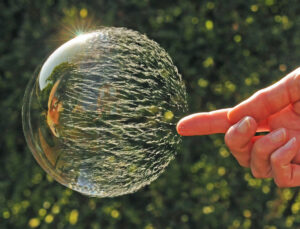

Unless, that is, someone wants to admit the truth and pop the AI bubble.
