AI optimists who are leading a trillion dollar arms race to replace the Internet got a big boost this week.

Two of the three Nobel science prizes involved AI.

- Geoffrey Hinton (shown), a former Google executive who worked on the foundational machine learning principles behind today’s AI software, was a winner of the Physics prize. He quit Google last year, fearing the implications of today’s AI boom.

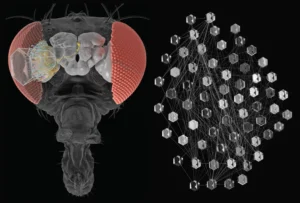

- Google DeepMind researchers Demis Hassabis and John Jumper, whose AlphaFold model produced the AlphaFold Protein Structure Database, shared the Chemistry prize.

You can think of this as AI’s scientific coming-out party. Jumper, one of the Chemistry winners, is just 39 and won only four years after AlphaFold 2 was released.

Just a decade ago, I was learning about college students using software to work out the structures of individual proteins, one at a time. AlphaFold 2 makes predicted structures for hundreds of millions of proteins freely available.

What’s more important, to me, is that current neural nets are still Version 1.0 work. Neural nets remain brute-force computing, mimicking a research process by plowing through all available data rather than engaging in true creativity. It’s library work, essentially a database query. It’s not thinking.

What Hinton worked on was the process by which database research happens. What Jumper and Demis Hassabis worked on was an implementation of that process.

Hinton designed an operating system; Jumper and Hassabis wrote an application.

What Comes Next

When we say we’re in the early innings of AI, this, and not Nvidia’s Blackwell chips, is what we mean. Don’t be confused.